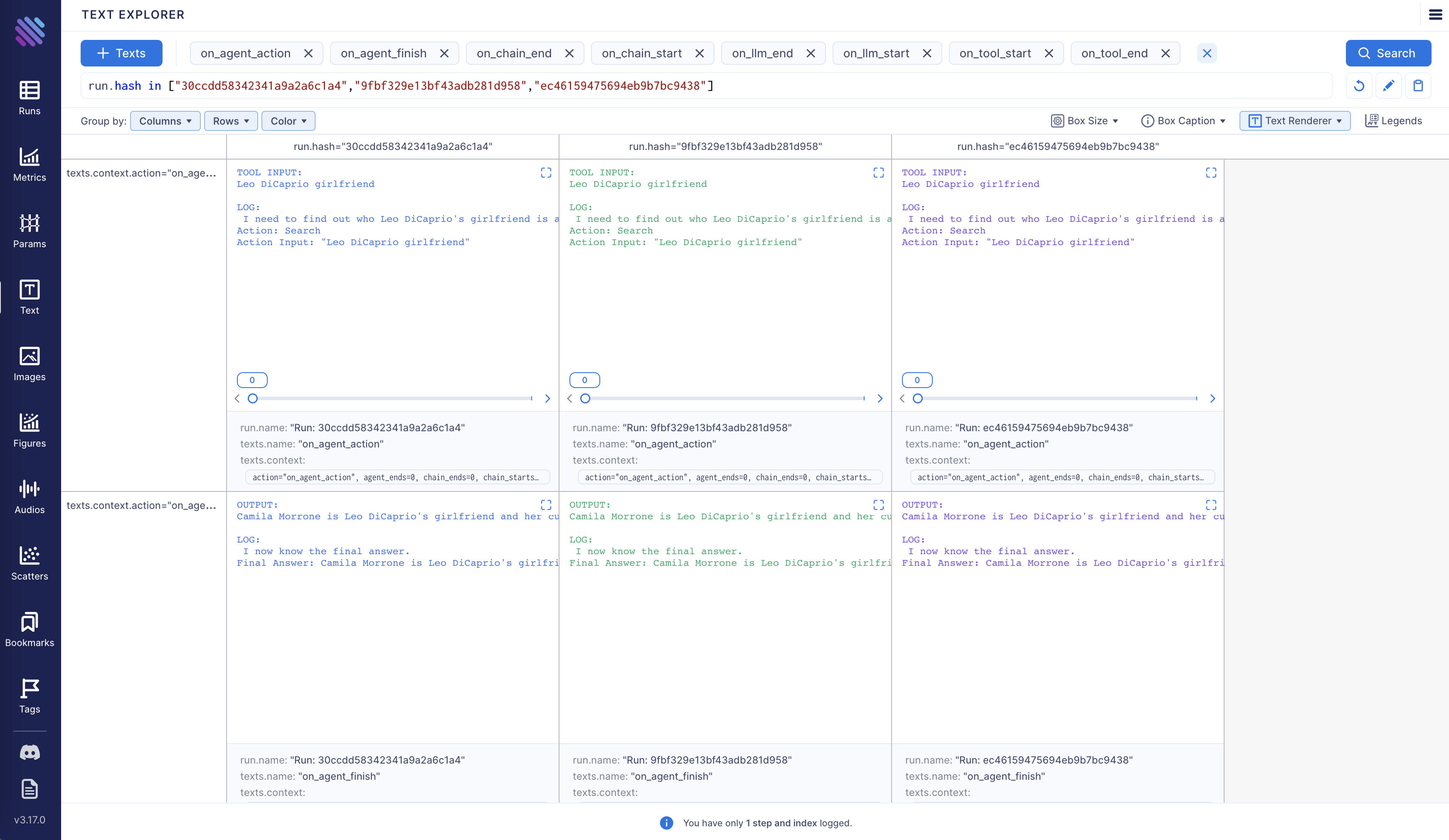

Additionally, you have the option to compare multiple executions side by side:

Aim is fully open source; learn more about Aim on GitHub.

Let’s move forward and see how to enable and configure the Aim callback.

Tracking LangChain Executions with Aim

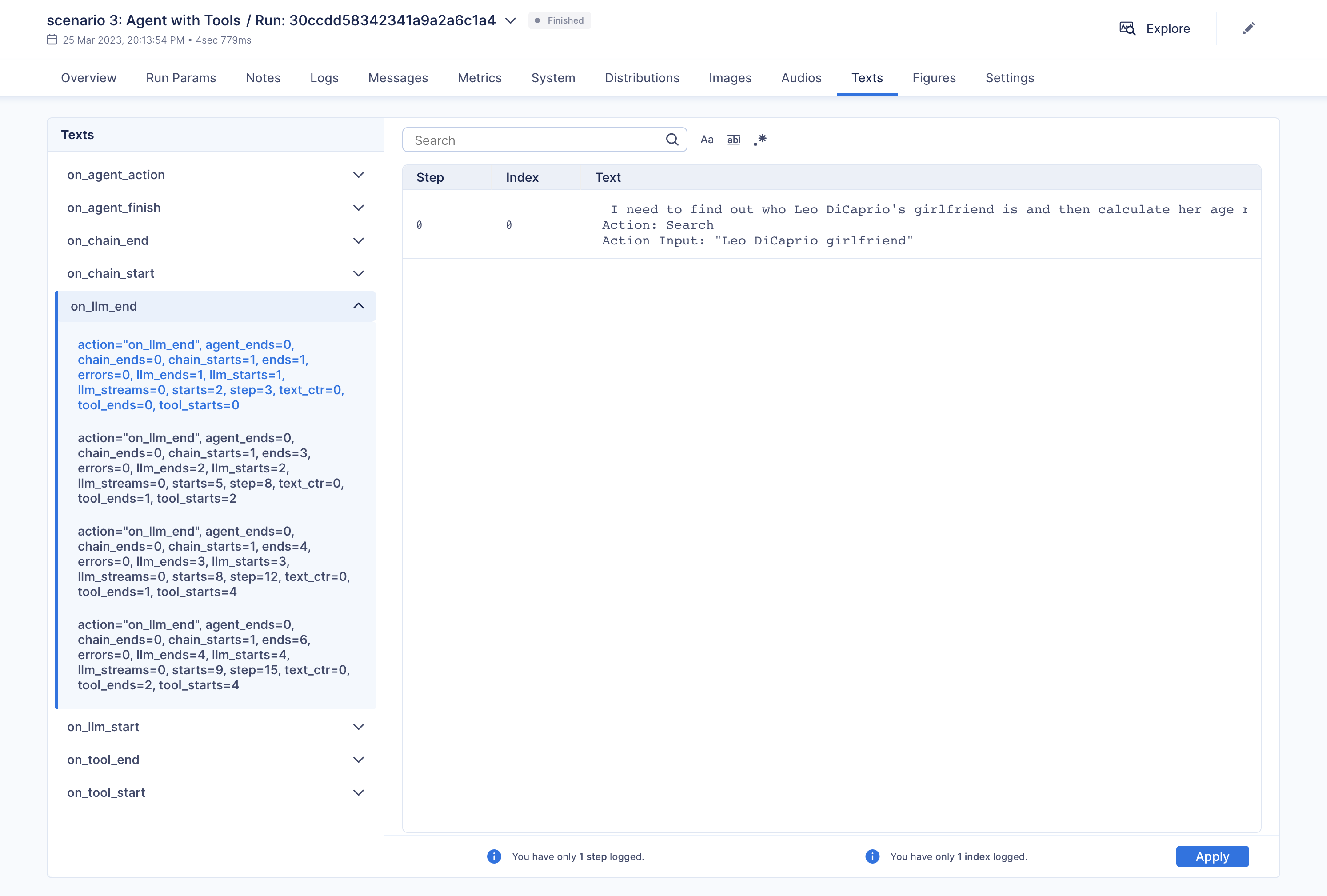

In this notebook we will explore three usage scenarios. To start, we install the necessary packages and import the required modules. We then configure two environment variables, which can be set either within the Python script or through the terminal.

AimCallbackHandler accepts the LangChain module or agent as input and logs at least the prompts and generated results, as well as the serialized version of the LangChain module, to the designated Aim run.
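The environment-variable step mentioned above could be sketched as follows. The text does not name the two variables, so `OPENAI_API_KEY` and `SERPAPI_API_KEY` are assumptions for illustration, as is the helper `set_if_missing`. The sketch keeps any value already exported in the terminal and only falls back to the value given in the script.

```python
import os


def set_if_missing(name, value):
    """Return the value already exported in the terminal, or set and return `value`."""
    # os.environ.setdefault leaves an existing variable untouched.
    return os.environ.setdefault(name, value)


# Assumed variable names; replace the placeholders with real keys.
# Terminal equivalent: export OPENAI_API_KEY=... before starting Python.
set_if_missing("OPENAI_API_KEY", "<your-openai-key>")
set_if_missing("SERPAPI_API_KEY", "<your-serpapi-key>")
```

Using `setdefault` rather than plain assignment means a key exported in the shell takes precedence over the placeholder in the script.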

The flush_tracker function records LangChain assets on Aim. By default, it resets the session rather than terminating it outright.
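A minimal sketch of the two ways flush_tracker might be called is shown here. It assumes the handler is importable from `langchain_community.callbacks` (older releases expose it from `langchain.callbacks`) and that `flush_tracker` accepts `langchain_asset`, `experiment_name`, `reset`, and `finish` keyword arguments; check these against the installed version. The helper names are hypothetical.

```python
def build_aim_callback(experiment_name, repo="."):
    """Create the handler. The import is deferred so this sketch loads without the packages."""
    # Assumed import path; requires the langchain-community and aim packages.
    from langchain_community.callbacks import AimCallbackHandler

    return AimCallbackHandler(repo=repo, experiment_name=experiment_name)


def record_and_continue(aim_callback, asset, next_experiment):
    """Record the asset and reset the session so a new experiment can start."""
    # reset=True, finish=False spell out the default behaviour described above.
    aim_callback.flush_tracker(
        langchain_asset=asset,
        experiment_name=next_experiment,
        reset=True,
        finish=False,
    )


def record_and_stop(aim_callback, asset):
    """Record the asset and end the session instead of resetting it."""
    aim_callback.flush_tracker(langchain_asset=asset, reset=False, finish=True)
```

In a notebook with several scenarios, `record_and_continue` would be called between scenarios and `record_and_stop` after the last one.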